R2RGen generates spatially diverse manipulation demonstrations for training real-world policies, requiring only one human demonstration without simulator setup. Results: 1human demo + R2RGen > 25 human demos!

Abstract

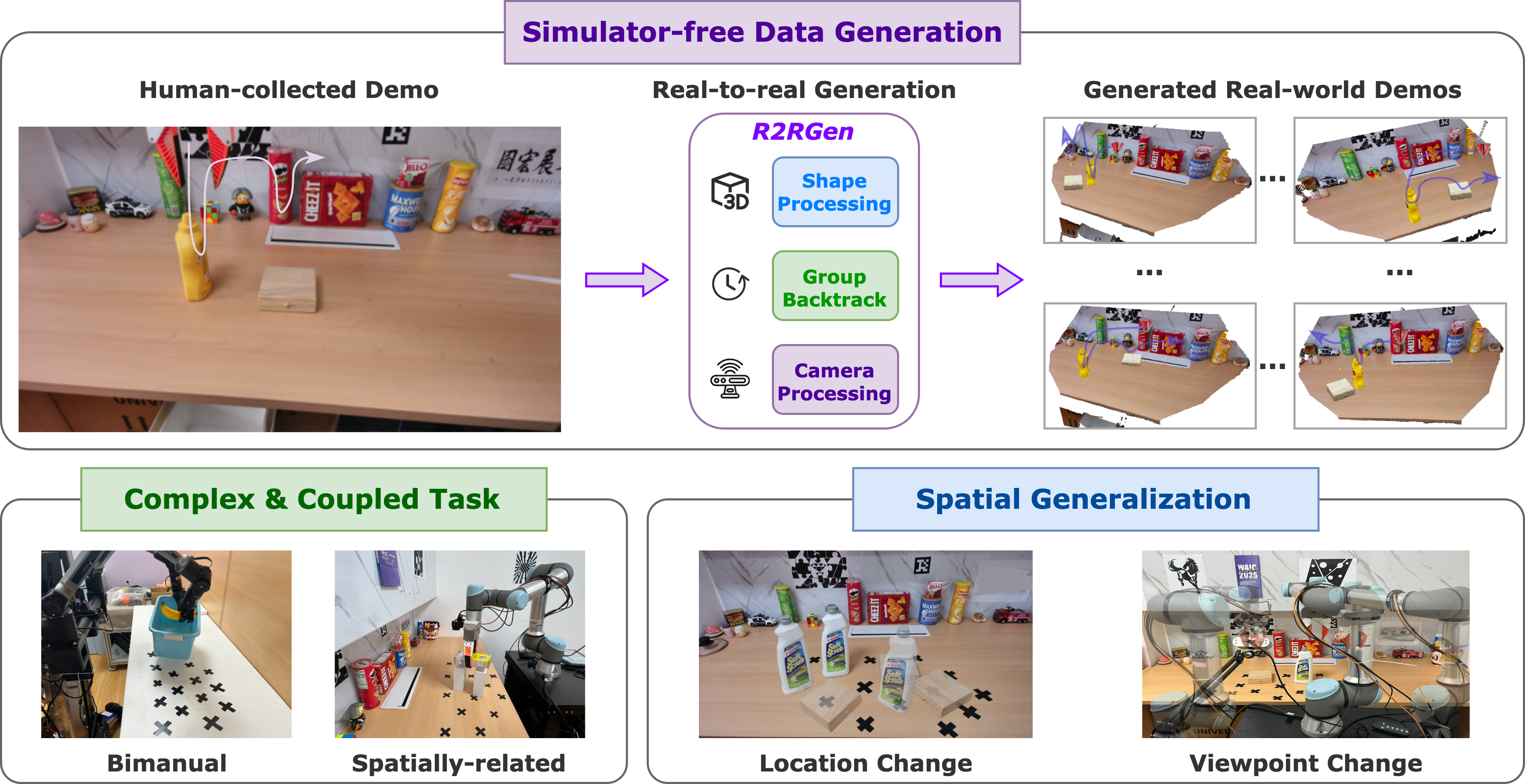

Towards the aim of generalized robotic manipulation, spatial generalization is the most fundamental capability that requires the policy to work robustly under different spatial distribution of objects, environment and agent itself. To achieve this, substantial human demonstrations need to be collected to cover different spatial configurations for training a generalized visuomotor policy via imitation learning. Prior works explore a promising direction that leverages data generation to acquire abundant spatially diverse data from minimal source demonstrations. However, most approaches face significant sim-to-real gap and are often limited to constrained settings, such as fixed-base scenarios and predefined camera viewpoints. In this paper, we propose a real-to-real 3D data generation framework (R2RGen) that directly augments the pointcloud observation-action pairs to generate real-world data. R2RGen is simulator- and rendering-free, thus being efficient and plug-and-play. Specifically, given a single source demonstration, we introduce an annotation mechanism for fine-grained parsing of scene and trajectory. A group-wise augmentation strategy is proposed to handle complex multi-object compositions and diverse task constraints. We further present camera-aware processing to align the distribution of generated data with real-world 3D sensor. Empirically, R2RGen substantially enhances data efficiency on extensive experiments and demonstrates strong potential for scaling and application on mobile manipulation.

Methods

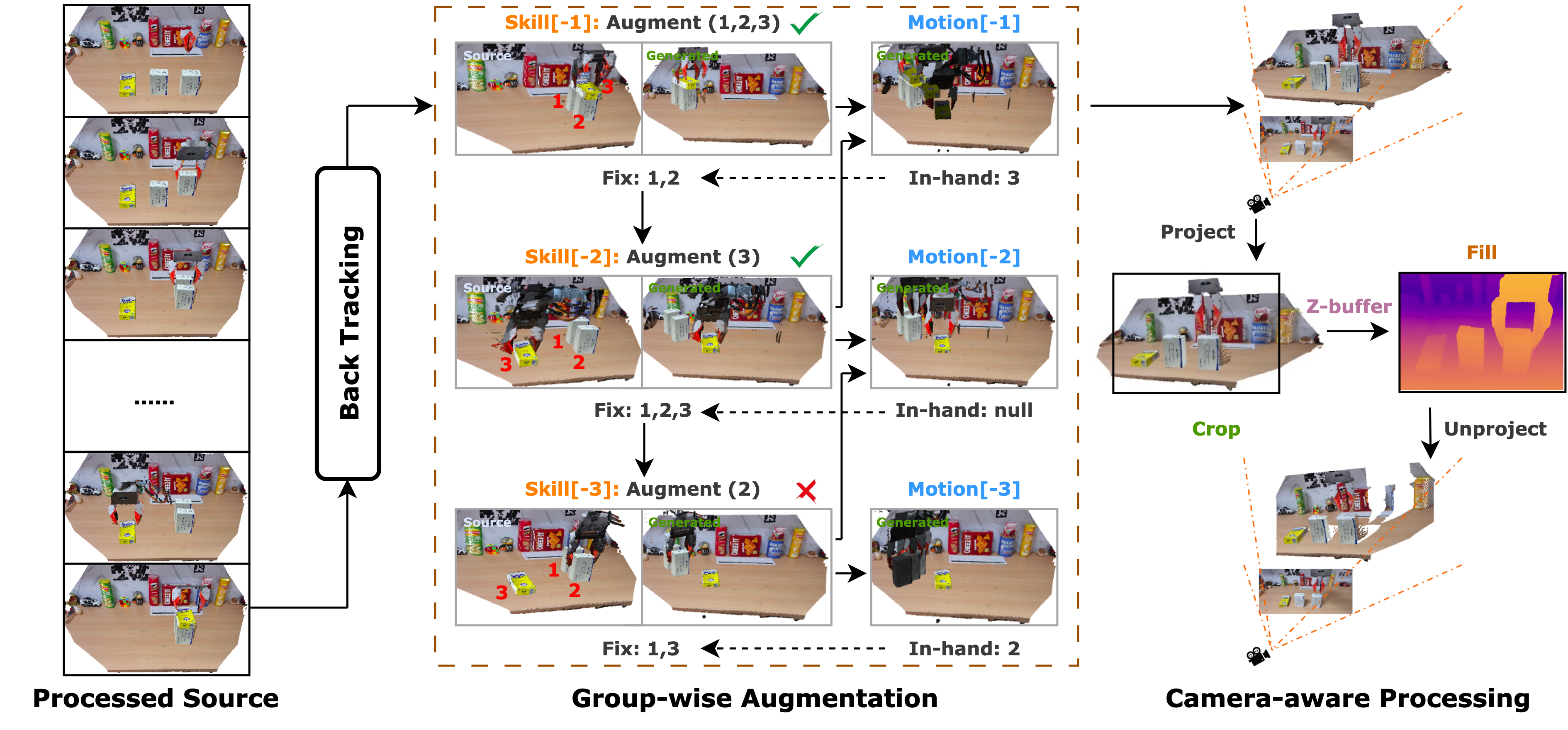

Pre-processing. We design an annotation system with shape-aware source pre-processing. After processing, the 3D scene is parsed into complete objects, environment and robot’s arm. The trajectory is parsed into interleaved motion and skill segments.

Pipeline of R2RGen. Given processed source demonstration, we backtrack skills and apply group-wise augmentation to maintain the spatial relationships among target objects, where a fixed object set is maintained to judge whether the augmentation is applicable. Then motion planning is performed to generate trajectories that connect adjacent skills. After augmentation, we perform camera-aware processing to make the pointclouds follow distribution of RGB-D camera. The solid arrows indicate the processing flow, while the dashed arrows indicate the updating of fixed object set.

Experiments

Source & Generated Demonstrations

R2RGen generates spatially diverse 3D observation-action sequences given one human demonstration. It ensures the pointclouds of objects and environment are reasonably distributed under arbitrary augmentation.

Evaluation Videos

With R2RGen, it is possible to train 3D policy that can generalizes to varying objects' locations and rotations as well as robot's viewpoints. All we need is one human demonstration and real-world data augmentation, no simulator or rendering required.

Extension & Application

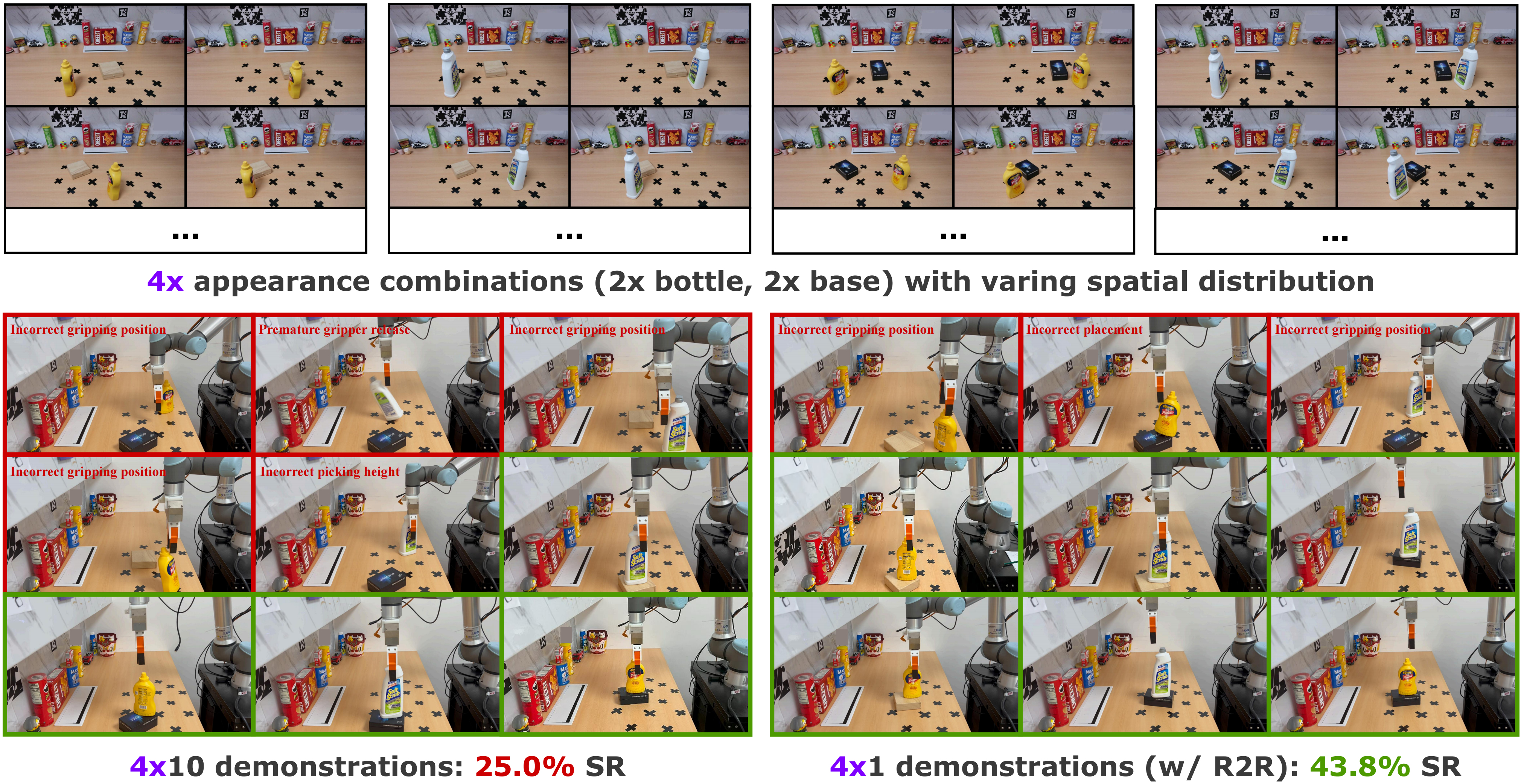

Extension: Appearance Generalization

Beyond spatial generalization, robotic manipulation tasks involve other forms of generalization, such as appearance generalization (i.e., adapting to novel object instances and environments) and task generalization. Among these, spatial generalization serves as the fundamental prerequisite for other generalization capabilities. Since this work focuses on single-task visuomotor policy learning, we investigate whether the spatial generalization enabled by R2RGen can further facilitate appearance generalization. As shown in figure below, we design a more challenging Place-Bottle task with four distinct bottle-base appearance combinations (2 bottle types x 2 base types). We observe that achieving both appearance and spatial generalization significantly increases data demand. Even with 40 human demonstrations (10 per bottle-base pair), the policy only reaches a 25% success rate. In contrast, using R2RGen, only 1 demonstration per bottle-base pair (4 in total) is needed to achieve a success rate of 43.8%, demonstrating its efficiency in handling combined generalization challenges.

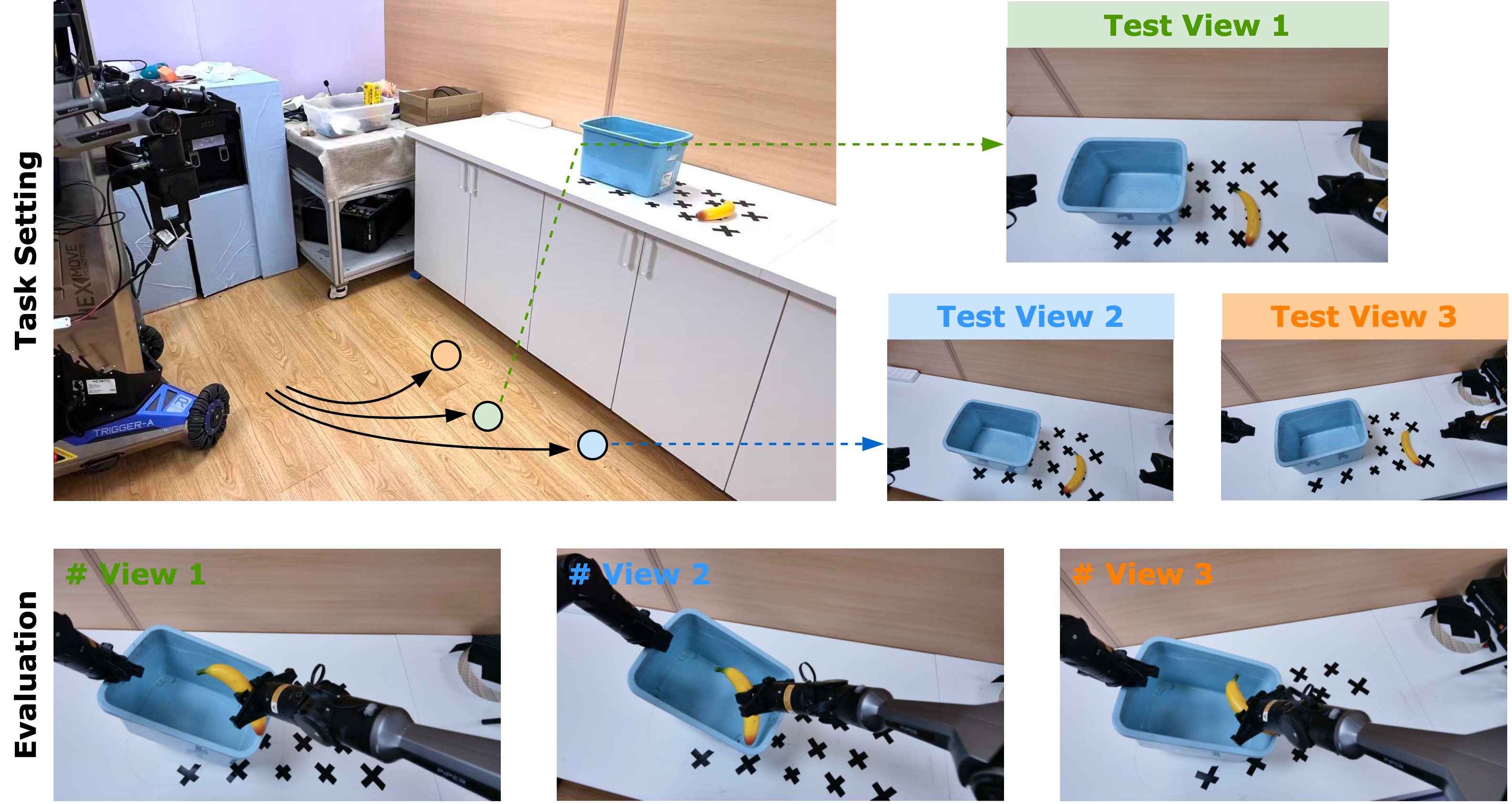

Application: Mobile Manipulation

R2RGen makes our 3D policy achieve strong spatial generalization across different viewpoints without camera calibration, so we can achieve mobile manipulation by simply combining a navigation system and a manipulation policy trained with R2RGen. Since the termination condition of navigation is relatively loose, the robot may stop at different docking point around the manipulation area, which imposes great challenges on the manipulation policy. According to figure below, using iDP3 trained with R2RGen, the policy successfully generalizes to different docking points with maximum distance larger than 5cm. Different from DemoGen (DP3) which requires a careful calibration of the camera pose to crop environment pointclouds, our method directly applies on raw RGB-D observations during both data generation and policy training / inference stages, which is more practical in real-world applications.